This is only for advanced users! Incorrect settings can result in poor rendering performance and/or crashes!

Table Of Contents

- Introduction

- How Redshift Partitions Memory

- The Memory Options

- Automatic Memory Management

- Percentage of GPU memory to use

- GPU memory inactivity timeout

- Irradiance Point Cloud Working Tree Reserved Memory

- Irradiance Cache Working Tree Reserved Memory

- Percentage Of Free Memory Used For Texture Cache / Maximum GPU Texture Cache Size

- Maximum CPU Texture Cache Size

- Ray Reserved Memory

- Adjusting Irradiance Point Cloud Or Irradiance Cache Reserved Memory

- Adjusting Texture Cache Memory

- Increasing Ray Reserved Memory

Introduction

Redshift supports a set of rendering features not found in other GPU renderers on the market, such as point-based GI, flexible shader graphs, out-of-core texturing and out-of-core geometry. While these features are supported by most CPU-based renderers, getting them to work efficiently and predictably on the GPU was a significant challenge!

One of the challenges with GPU programs is memory management. There are two main issues at hand: first, the GPU has limited memory resources; second, no robust methods exist for dynamically allocating GPU memory. For this reason, Redshift has to partition free GPU memory between the different modules so that each one can operate within known limits, which are defined at the beginning of each frame.

Some CPU renderers also do a similar kind of memory partitioning. You might have seen other renderers refer to things like "dynamic geometry memory" or "texture cache". The first holds the scene's polygons while the second holds the textures. Redshift also uses "geometry memory" and "texture cache" for polygons and textures respectively.

Additionally, Redshift needs to allocate memory for rays. Because the GPU is a massively parallel processor, Redshift constantly builds lists of rays (the 'workload') and dispatches these to the GPU. The more rays we can send to the GPU in one go, the better the performance. For example, a 1920x1080 scene using brute-force GI with 1024 rays per pixel needs to shoot a minimum of 2.1 billion rays! And this doesn't even include extra rays that might be needed for antialiasing, shadows, depth-of-field, etc. Keeping all these rays in memory at once would require far too much memory, so Redshift splits the work into 'parts' and submits these parts individually. This way, the GPU only needs enough memory for a single part.
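The arithmetic behind that ray count, and how splitting the work into parts bounds the memory needed, can be sketched in Python. The 64 bytes per in-flight ray and the 512MB/1024MB ray budgets below are hypothetical numbers chosen for illustration, not Redshift's actual ray footprint; only the shape of the calculation matters.

```python
import math

# Hypothetical figure for illustration only; Redshift's real per-ray
# memory footprint is not documented here.
BYTES_PER_RAY = 64
MB = 1024 * 1024


def min_gi_rays(width: int, height: int, rays_per_pixel: int) -> int:
    """Lower bound on brute-force GI rays: rays_per_pixel for every pixel."""
    return width * height * rays_per_pixel


def parts_needed(total_rays: int, ray_budget_mb: int) -> int:
    """Number of parts the work must be split into so each part fits the budget."""
    rays_per_part = ray_budget_mb * MB // BYTES_PER_RAY
    return math.ceil(total_rays / rays_per_part)


total = min_gi_rays(1920, 1080, 1024)
print(f"{total:,} rays")           # 2,123,366,400 rays
print(parts_needed(total, 512))    # 254
print(parts_needed(total, 1024))   # 127: more ray memory, fewer and larger parts
```

Doubling the ray budget halves the number of submissions, which is why a larger ray memory can help performance.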

Finally, certain techniques such as the Irradiance cache and Irradiance Point cloud need extra memory during their computation stage to store the intermediate points. How many points will be generated by these stages is not known in advance so a memory budget has to be reserved. The current version of Redshift does not automatically adjust these memory buffers so, if these stages generate too many points, the rendering will be aborted and the user will have to go to the memory options and increase these limits. In the future, Redshift will automatically reconfigure memory in these situations so you don't have to.

How Redshift Partitions Memory

From a high-level point of view the steps the renderer takes to allocate memory are the following:

- Get the available free GPU memory

- If we are performing irradiance cache computations or irradiance point cloud computations, subtract the appropriate memory for these calculations (usually a few tens to a few hundreds of MB)

- If we are using the irradiance cache, photon map, irradiance point cloud or subsurface scattering, allocate memory for their data

- Allocate ray memory

- From what's remaining, use a percentage for geometry (polygons) and a percentage for the texture cache
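The steps above can be sketched as a single function. This is a simplified model, not Redshift's code: all inputs are in MB, the working-tree, GI-data and ray sizes are passed in as plain numbers (in practice Redshift derives them from the scene and settings), and geometry is assumed to receive everything the texture cache does not use, as described in the texture cache section. The example inputs (8000MB free, 200MB of GI data, 500MB of rays) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MemoryPlan:
    working_tree_mb: int
    gi_data_mb: int
    rays_mb: int
    texture_mb: int
    geometry_mb: int


def partition_gpu_memory(
    free_mb: int,
    use_percent: int,        # "Percentage of GPU memory to use", e.g. 90
    working_tree_mb: int,    # IC / IPC working memory (0 if not computing them)
    gi_data_mb: int,         # irradiance cache, photon map, IPC, SSS data
    rays_mb: int,            # ray memory
    texture_percent: int,    # "Percentage Of Free Memory Used For Texture Cache"
    texture_max_mb: int,     # "Maximum GPU Texture Cache Size"
) -> MemoryPlan:
    # Step 1: only a percentage of the free GPU memory is reserved.
    budget = free_mb * use_percent // 100
    # Steps 2-4: subtract working memory, GI data and rays.
    remaining = budget - working_tree_mb - gi_data_mb - rays_mb
    if remaining <= 0:
        raise MemoryError("reserved buffers and rays do not fit in the GPU budget")
    # Step 5: split what is left between the texture cache and geometry
    # (assumption: geometry gets everything the texture cache does not).
    texture = min(remaining * texture_percent // 100, texture_max_mb)
    geometry = remaining - texture
    return MemoryPlan(working_tree_mb, gi_data_mb, rays_mb, texture, geometry)


# Hypothetical inputs for illustration.
plan = partition_gpu_memory(
    free_mb=8000, use_percent=90, working_tree_mb=128, gi_data_mb=200,
    rays_mb=500, texture_percent=15, texture_max_mb=128,
)
print(plan.texture_mb, plan.geometry_mb)  # 128 6244
```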

The Memory Options

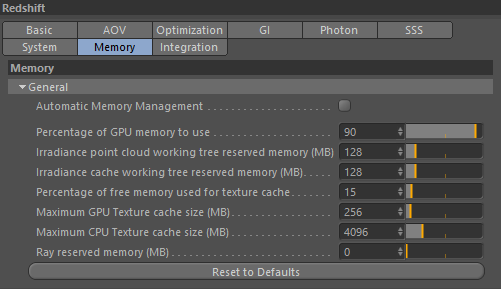

Inside the Redshift rendering options there is a "Memory" tab that contains all the GPU memory-related options. These are:

Automatic Memory Management

This setting will let Redshift analyze the scene and determine how GPU memory should be partitioned between rays, geometry and textures. Once this setting is enabled, the controls for these are grayed out. We recommend leaving this setting enabled, unless you are an advanced user and have observed Redshift making the wrong decision (because of a bug or some other kind of limitation).

Percentage of GPU memory to use

By default, Redshift reserves 90% of the GPU's free memory. This means that all other GPU apps and the OS get the remaining 10%. If you are running other GPU-heavy apps during rendering and encountering issues with them, you can reduce that figure to 80% or 70%. On the other hand, if you know that no other app will use the GPU, you can increase it to 100%.

Please note that increasing the percentage beyond 90% is not typically recommended as it might introduce system instabilities and/or driver crashes!

GPU memory inactivity timeout

This setting was added in version 2.5.68. Previously, there were cases where Redshift could reserve memory and hold it indefinitely.

As mentioned above, Redshift reserves a percentage of your GPU's free memory in order to operate. Reserving and freeing GPU memory is an expensive operation, so Redshift holds on to this memory while there is any rendering activity, including shaderball rendering. If rendering activity stops for 10 seconds, Redshift releases this memory so that other 3D applications can function without problems.
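A minimal sketch of this hold-and-release behaviour, assuming a simple idle timer that is checked periodically. `GpuMemoryHolder`, `on_render_activity` and `poll` are hypothetical names; no real GPU allocation takes place, and the clock is injectable so the behaviour can be demonstrated without actually waiting.

```python
import time
from typing import Callable, Optional


class GpuMemoryHolder:
    """Hypothetical model of the inactivity timeout; no GPU calls are made."""

    def __init__(self, timeout_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock
        self.reserved = False
        self.reservations = 0  # counts the (expensive) reserve operations
        self.last_activity: Optional[float] = None

    def on_render_activity(self) -> None:
        """Any rendering, including shaderball renders, keeps the memory alive."""
        if not self.reserved:
            self.reserved = True
            self.reservations += 1
        self.last_activity = self.clock()

    def poll(self) -> None:
        """Release the reservation once there has been no activity for timeout_s."""
        if self.reserved and self.clock() - self.last_activity >= self.timeout_s:
            self.reserved = False
            self.last_activity = None


now = 0.0
holder = GpuMemoryHolder(clock=lambda: now)
holder.on_render_activity()  # t=0: memory reserved
now = 5.0
holder.on_render_activity()  # t=5: still rendering, memory reused
now = 12.0
holder.poll()                # idle for 7 s: memory kept
print(holder.reserved, holder.reservations)  # True 1
now = 15.0
holder.poll()                # idle for 10 s: memory released
print(holder.reserved)       # False
```

Holding the reservation across consecutive renders means the expensive reserve step happens once, not per render.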

Irradiance Point Cloud Working Tree Reserved Memory

This is the "working" memory during the irradiance point cloud computations. The default 128MB should be able to hold several hundred thousand points. This setting should be increased if you encounter a render error during computation of the irradiance point cloud. Please see below.

Irradiance Cache Working Tree Reserved Memory

This is the "working" memory during the irradiance cache computations. The default 128MB should be able to hold several hundred thousand points. This setting should be increased if you encounter a render error during computation of the irradiance cache. Please see below.

Percentage Of Free Memory Used For Texture Cache / Maximum GPU Texture Cache Size

Once reserved memory and rays have been subtracted from free memory, the remainder is split between the geometry (polygons) and the texture cache (textures). The "Percentage" parameter tells the renderer what percentage of this remaining memory it can use for texturing.

Example:

Say we are using a 2GB video card and what's left after reserved buffers and rays is 1.7GB. The default 15% for the texture cache means that we can use up to 15% of that 1.7GB, i.e. approximately 255MB. If, on the other hand, we are using a video card with 1GB and, after reserved buffers and rays, we are left with 700MB, the texture cache can be up to 105MB (15% of 700MB).

Once we know the maximum number of MB we can use for the texture cache, we can further limit it using the "Maximum Texture Cache Size" option. This is useful for video cards with a lot of free memory. For example, say you are using a 6GB Quadro and, after reserved buffers and rays, you have 5.7GB free. 15% of that is 855MB. There are extremely few scenes that will ever need such a large texture cache! Without the "Maximum Texture Cache Size" option, you would have to keep modifying the "Percentage" option depending on the video card you are using.

Using these two options ("Percentage" and "Maximum") allows you to specify a percentage that makes sense (and 15% most often does) while not wasting memory on video cards with lots of free memory.

How and when these parameters should be modified is explained further below.
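The interaction between "Percentage" and "Maximum" amounts to a clamp. The sketch below reproduces the worked examples in this section; it treats 1GB as 1000MB, as those examples do, and the function name `gpu_texture_cache_mb` is hypothetical.

```python
from typing import Optional


def gpu_texture_cache_mb(remaining_mb: float, percentage: float,
                         max_mb: Optional[float] = None) -> float:
    """Texture cache size: a percentage of what remains after reserved buffers
    and rays, optionally capped by the maximum texture cache size option."""
    size = remaining_mb * percentage / 100
    return size if max_mb is None else min(size, max_mb)


print(gpu_texture_cache_mb(1700, 15))       # 255.0 (2GB card)
print(gpu_texture_cache_mb(700, 15))        # 105.0 (1GB card)
print(gpu_texture_cache_mb(5700, 15))       # 855.0 (6GB Quadro, no cap)
print(gpu_texture_cache_mb(5700, 15, 128))  # 128 (cap applied)
```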

Maximum CPU Texture Cache Size

Before texture data is sent to the GPU, it is stored in CPU memory. By default, Redshift uses 4GB for this CPU storage. If you encounter performance issues with texture-heavy scenes, increase this setting to 8GB or higher.

Ray Reserved Memory

If you leave this setting at zero, Redshift will use a default number of MB which depends on the shader configuration. However, if your scene is very lightweight in terms of polygons, or you are using a video card with a lot of free memory, you can specify a budget for the rays and potentially increase your rendering performance. This is explained in its own section below.

Adjusting Irradiance Point Cloud Or Irradiance Cache Reserved Memory

The only time you should ever have to modify these numbers is if you get a message that reads like this:

Irradiance cache points don't fit in VRAM. Frame aborted. Please either reduce Irradiance Cache quality settings or increase the irradiance cache memory budget in the memory options

Or

Irradiance point cloud doesn't fit in VRAM. Frame aborted. Please either increase the 'Screen Radius' parameter or the irradiance point cloud memory budget in the memory options

If it's not possible (or undesirable) to modify the irradiance point cloud or irradiance cache quality parameters, you can try increasing the memory from 128MB to 256MB or 512MB. If you are already using a lot of memory for this and still get this message, the scene might have a lot of micro-detail, in which case consider using Brute-Force GI instead.

Adjusting Texture Cache Memory

When Redshift renders, a "Feedback Display" window should pop up. This window contains useful information about how much memory is allocated for individual modules. One of these entries is "Texture".

That number reports how many MB the CPU had to send to the GPU via the PCIe bus for texturing. Initially it might say something like "0 KB [128 MB]". This means "your texture cache is 128MB large and, so far, you have uploaded no data".

Redshift can successfully render scenes containing gigabytes of texture data. It achieves that by 'recycling' the texture cache (in this case 128MB). It also uploads only the parts of a texture that are needed instead of the entire texture: when textures are far away, a lower-resolution version of the texture is used (these are called "MIP maps"), and only specific tiles of that MIP map are uploaded.

Because of this method of recycling memory, you will very likely see the PCIe-transferred figure grow larger than the texture cache size (shown in the square brackets). That's ok most of the time – the performance penalty of re-uploading a few megabytes here and there is typically not an issue.

However, if you see the "Uploaded" number grow very fast and quickly go into several hundreds of megabytes or even gigabytes, this might mean that the texture cache is too small and needs to be increased.

If that is the case, you will need to do one or two things:

- First, try increasing the "Max Texture Cache Size". The default is 128MB. Try 256MB as a test.

- If you did that and the number shown in the Feedback window did not become 256MB, then you will need to increase the "Percentage Of Free Memory Used For Texture Cache" parameter. Try values such as 0.3 or 0.5 (i.e. 30% or 50%).
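If you log the Feedback Display values, the "is the texture cache too small?" check can be scripted. This is a sketch under stated assumptions: it assumes the entry is formatted like the example "0 KB [128 MB]", treats KB/MB/GB as binary (1024-based) units, and uses an arbitrary rule of thumb (uploads exceeding four times the cache size) as a stand-in for "growing very fast". Neither the threshold nor the function names come from Redshift.

```python
import re

# Matches "<uploaded> <unit> [<cache size> <unit>]", e.g. "0 KB [128 MB]".
_ENTRY = re.compile(r"([\d.]+)\s*(KB|MB|GB)\s*\[\s*([\d.]+)\s*(KB|MB|GB)\s*\]")
_TO_MB = {"KB": 1 / 1024, "MB": 1.0, "GB": 1024.0}  # assumes binary units


def parse_texture_entry(entry: str) -> tuple[float, float]:
    """Parse e.g. '0 KB [128 MB]' into (uploaded_mb, cache_size_mb)."""
    match = _ENTRY.search(entry)
    if match is None:
        raise ValueError(f"unrecognised Feedback entry: {entry!r}")
    uploaded = float(match.group(1)) * _TO_MB[match.group(2)]
    cache = float(match.group(3)) * _TO_MB[match.group(4)]
    return uploaded, cache


def texture_cache_may_be_too_small(entry: str, ratio_threshold: float = 4.0) -> bool:
    """Hypothetical heuristic: flag when uploads exceed ratio_threshold x cache size."""
    uploaded, cache = parse_texture_entry(entry)
    return uploaded > ratio_threshold * cache


for entry in ["0 KB [128 MB]", "300 MB [128 MB]", "1.5 GB [128 MB]"]:
    print(entry, texture_cache_may_be_too_small(entry))
```

A single snapshot cannot measure how fast the number grows, so in practice you would compare readings taken a few seconds apart.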

Increasing Ray Reserved Memory

On average, Redshift can fit approximately 1 million triangles per 60MB of memory (in the typical case of meshes containing a single UV channel and a tangent space per vertex). This means that even scenes with a few million triangles might still leave some memory free (unused for geometry).
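The 60MB-per-million-triangles rule of thumb makes it easy to estimate how much of a geometry budget a scene might leave unused. The 400MB budget in the example is hypothetical, and real usage will differ for meshes with extra UV channels or other per-vertex data.

```python
# Rule of thumb from the text: one UV channel and a tangent space per vertex.
MB_PER_MILLION_TRIANGLES = 60


def estimated_geometry_mb(triangles: int) -> float:
    """Approximate GPU memory needed for a mesh with the given triangle count."""
    return triangles / 1_000_000 * MB_PER_MILLION_TRIANGLES


def spare_geometry_mb(triangles: int, geometry_budget_mb: float) -> float:
    """Estimated geometry memory left unused (never negative)."""
    return max(geometry_budget_mb - estimated_geometry_mb(triangles), 0.0)


print(estimated_geometry_mb(3_000_000))   # 180.0
print(spare_geometry_mb(3_000_000, 400))  # 220.0 (hypothetical 400MB budget)
```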

That memory can be reassigned to the rays which, as was explained earlier, will help Redshift submit fewer, larger packets of work to the GPU which, in some cases, can be good for performance.

Determining if your scene's geometry is underutilizing GPU memory is easy: all you have to do is look at the Feedback display "Geometry" entry. Similar to the texture cache, the geometry memory is recycled. If your scene is simple enough, then after rendering a frame you will see the PCIe-transferred memory be significantly lower than the geometry cache size (shown in the square brackets). For example, it might read like this: "Geometry: 100 MB [400 MB]". In this example, the 300MB that geometry is not using can be reassigned to rays.

The ray memory currently used is also shown on the Feedback display under "Rays". It might read something like "Rays: 300MB". So, in the memory options, we could set "Ray Reserved Memory" to approximately 600MB, i.e. the 300MB that our geometry is not using plus the 300MB that rays are already using.
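The same calculation can be scripted from the two Feedback display entries. A minimal sketch, assuming the entries look exactly like the examples above ("Geometry: 100 MB [400 MB]" and "Rays: 300MB"), that the first geometry figure is the transferred amount and the bracketed one the cache size, and that units are binary; the function names are hypothetical. The figures are only meaningful after a frame has been rendered.

```python
import re

_QUANTITY = re.compile(r"([\d.]+)\s*(KB|MB|GB)")
_TO_MB = {"KB": 1 / 1024, "MB": 1.0, "GB": 1024.0}  # assumes binary units


def quantities_mb(entry: str) -> list[float]:
    """All '<number> <unit>' quantities in a Feedback entry, converted to MB."""
    return [float(n) * _TO_MB[u] for n, u in _QUANTITY.findall(entry)]


def suggested_ray_reserved_mb(geometry_entry: str, rays_entry: str) -> float:
    """Current ray memory plus the geometry memory the scene leaves unused."""
    transferred, cache = quantities_mb(geometry_entry)[:2]
    rays_used = quantities_mb(rays_entry)[0]
    unused_geometry = max(cache - transferred, 0.0)
    return rays_used + unused_geometry


print(suggested_ray_reserved_mb("Geometry: 100 MB [400 MB]", "Rays: 300MB"))  # 600.0
```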